Academic Lectures

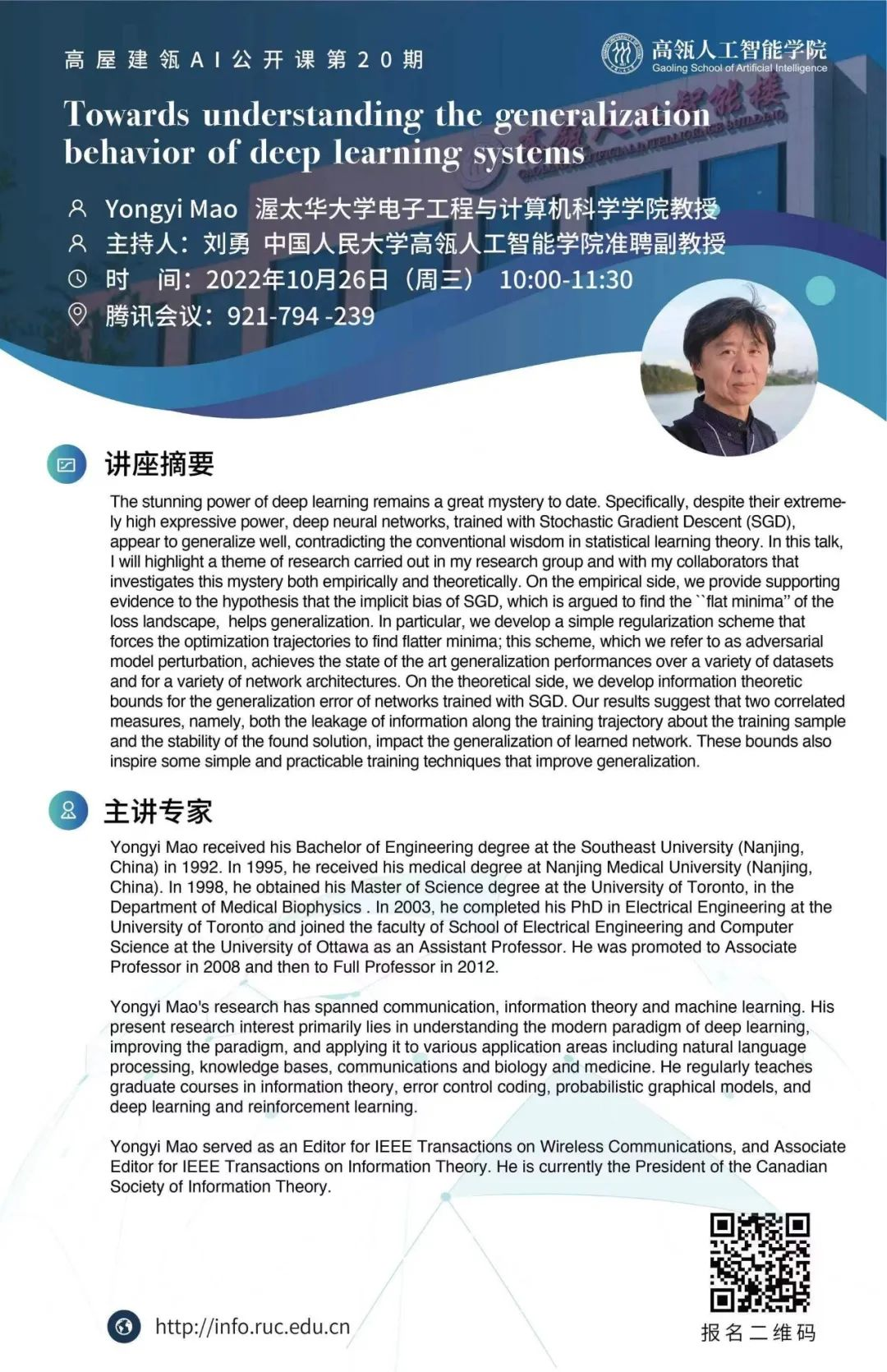

“Gaowu Jianling AI Open Course” (高屋建瓴AI公开课), Session 20: Towards understanding the generalization behavior of deep learning systems

Date: 2022-10-26

Talk title: Towards understanding the generalization behavior of deep learning systems

Time: Wednesday, October 26, 10:00-11:30

Abstract: The stunning power of deep learning remains a great mystery to date. Specifically, despite their extremely high expressive power, deep neural networks trained with Stochastic Gradient Descent (SGD) appear to generalize well, contradicting the conventional wisdom in statistical learning theory. In this talk, I will highlight a theme of research carried out in my research group and with my collaborators that investigates this mystery both empirically and theoretically. On the empirical side, we provide supporting evidence for the hypothesis that the implicit bias of SGD, which is argued to find the “flat minima” of the loss landscape, helps generalization. In particular, we develop a simple regularization scheme that forces the optimization trajectories to find flatter minima; this scheme, which we refer to as adversarial model perturbation, achieves state-of-the-art generalization performance over a variety of datasets and for a variety of network architectures. On the theoretical side, we develop information-theoretic bounds for the generalization error of networks trained with SGD. Our results suggest that two correlated measures, namely the leakage of information along the training trajectory about the training sample and the stability of the found solution, impact the generalization of the learned network. These bounds also inspire some simple and practicable training techniques that improve generalization.

The talk is based on the following works.

Yaowei Zheng, Richong Zhang, and Yongyi Mao, “Regularizing Neural Networks via Adversarial Model Perturbation”, CVPR 2021

Ziqiao Wang and Yongyi Mao, “On the Generalization of Models Trained with SGD: Information-theoretic Bounds and Implications”, ICLR 2022

Ziqiao Wang and Yongyi Mao, “Two Facets of SDE Under an Information-Theoretic Lens: Generalization of SGD via Training Trajectories and via Terminal States”, Submitted, 2022

Speaker

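Yongyi Mao received his bachelor's degree in engineering from Southeast University (Nanjing, China) in 1992. In 1995, he received a medical degree from Nanjing Medical University (Nanjing, China). In 1998, he received his M.Sc. degree from the Department of Medical Biophysics at the University of Toronto. In 2003, he received his Ph.D. in electrical engineering from the University of Toronto and joined the School of Electrical Engineering and Computer Science at the University of Ottawa as an assistant professor. He was promoted to associate professor in 2008 and to full professor in 2012. His research areas include communications, information theory, and machine learning. His current research interests lie mainly in understanding and improving the modern paradigm of deep learning and in applying that paradigm to a range of fields, including natural language processing, knowledge bases, communications, biology, and medicine. He teaches graduate courses on information theory, error-correcting codes, probabilistic graphical models, deep learning, and reinforcement learning. He has served as an Editor for IEEE Transactions on Wireless Communications and as an Associate Editor for IEEE Transactions on Information Theory. He is currently President of the Canadian Society of Information Theory.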

Program Background

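The “Gaowu Jianling AI Open Course” program was launched by the Gaoling School of Artificial Intelligence at Renmin University of China to broaden the influence of artificial intelligence as a discipline and to raise the level of its development. The program takes its name from the idiom 高屋建瓴 (gaowu jianling, roughly “operating from a commanding height”), signifying that the Gaoling School of Artificial Intelligence serves as a platform for bringing together top talent and voicing far-sighted perspectives on the directions of AI research.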
