Academic Lectures

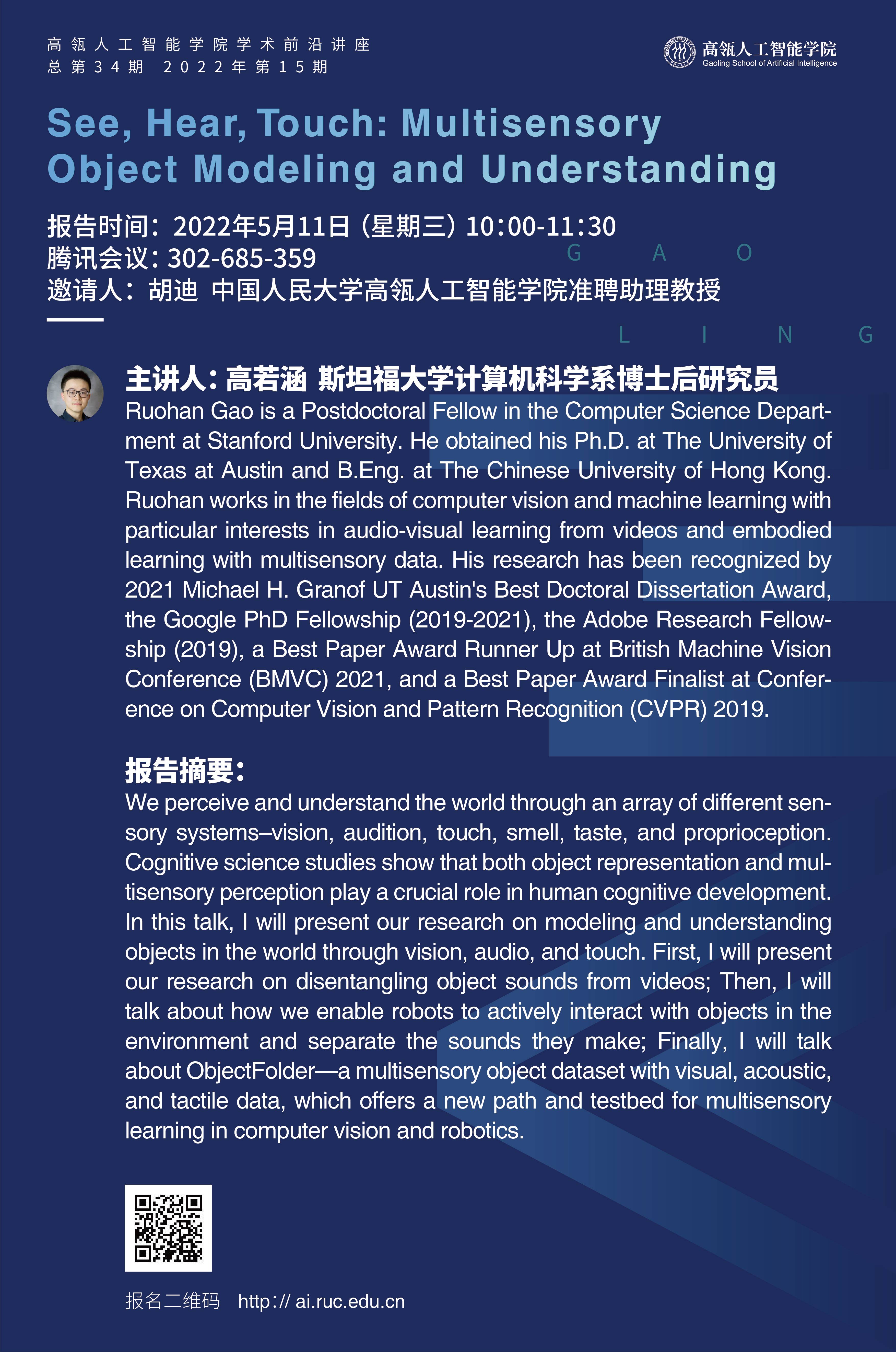

Academic Frontier Lecture Series 2022, No. 15: See, Hear, Touch: Multisensory Object Modeling and Understanding

Date: 2022-05-11

Time: Wednesday, May 11, 2022, 10:00-11:30

Tencent Meeting: 302-685-359

Host: Di Hu (胡迪), Tenure-Track Assistant Professor, Gaoling School of Artificial Intelligence, Renmin University of China

Speaker: Ruohan Gao (高若涵), Postdoctoral Researcher, Department of Computer Science, Stanford University

Speaker bio: Ruohan Gao is a Postdoctoral Fellow in the Computer Science Department at Stanford University. He obtained his Ph.D. at The University of Texas at Austin and his B.Eng. at The Chinese University of Hong Kong. Ruohan works in the fields of computer vision and machine learning, with particular interests in audio-visual learning from videos and embodied learning with multisensory data. His research has been recognized with the 2021 Michael H. Granof Award (UT Austin's Best Doctoral Dissertation Award), the Google PhD Fellowship (2019-2021), the Adobe Research Fellowship (2019), a Best Paper Award Runner Up at the British Machine Vision Conference (BMVC) 2021, and a Best Paper Award Finalist at the Conference on Computer Vision and Pattern Recognition (CVPR) 2019.

Talk title: See, Hear, Touch: Multisensory Object Modeling and Understanding

Abstract: We perceive and understand the world through an array of different sensory systems: vision, audition, touch, smell, taste, and proprioception. Cognitive science studies show that both object representation and multisensory perception play a crucial role in human cognitive development. In this talk, I will present our research on modeling and understanding objects in the world through vision, audio, and touch. First, I will present our research on disentangling object sounds from videos. Then, I will talk about how we enable robots to actively interact with objects in the environment and separate the sounds they make. Finally, I will talk about ObjectFolder, a multisensory object dataset with visual, acoustic, and tactile data, which offers a new path and testbed for multisensory learning in computer vision and robotics.
