Six papers from GSAI accepted by CCF A-category Conference ACL

Six papers by faculty and students of the Gaoling School of Artificial Intelligence (GSAI), Renmin University of China, were recently accepted by the Annual Meeting of the Association for Computational Linguistics (ACL). ACL is the premier international conference in computational linguistics and natural language processing, and it is a Category A international academic conference recommended by the China Computer Federation (CCF). ACL 2021 will be held in Bangkok, Thailand, from August 1 to 6.

Since January 2021, GSAI has published or had accepted 43 papers in CCF A-category international journals and conferences and 5 papers in CCF B-category journals and conferences. Of these, 46 list GSAI students or faculty members as first or corresponding authors.

Paper Introduction (ACL Main Conference)

Paper Title: Enabling Lightweight Fine-tuning for Pre-trained Language Model Compression based on Matrix Product Operators

Authors: Peiyu Liu, Zefeng Gao, Xin Zhao, Zhiyuan Xie, Zhongyi Lu, Jirong Wen

Corresponding authors: Xin Zhao, Zhongyi Lu

Paper Overview: This paper proposes a novel compression method for pre-trained language models, based on the matrix product operator (MPO) representation used in quantum many-body physics. An MPO expresses a weight matrix as the product of a central tensor (containing the main information) and auxiliary tensors (containing very few parameters). Building on this representation, we propose a novel fine-tuning strategy that updates the overall weight matrix by updating only the auxiliary tensors. We also design a new optimization method for training multi-layer network structures under the MPO representation. The proposed method is general: applied to either the original model or the compressed model, it greatly reduces the number of parameters that need to be fine-tuned. The experiments demonstrate the effectiveness of the method in model compression, reducing the number of parameters to be fine-tuned by 91% on average.
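To make the parameter split concrete, the following is a minimal bookkeeping sketch, not the authors' implementation. It assumes an exact MPO with full bond dimensions, treats the middle local tensor as the central tensor, and uses a hypothetical 64 x 64 weight matrix factorized as 2 x 16 x 2 on each side. The function names `mpo_shapes` and `split_parameters` are illustrative only.

```python
from math import prod


def mpo_shapes(in_factors, out_factors):
    """Shapes (d_prev, i_k, j_k, d_next) of the local tensors of an exact MPO.

    Assumption: bond dimensions are not truncated, so each bond is as large
    as the smaller of the two sides it connects.
    """
    assert len(in_factors) == len(out_factors)
    n = len(in_factors)
    sizes = [i * j for i, j in zip(in_factors, out_factors)]
    bonds = [1] + [min(prod(sizes[:k]), prod(sizes[k:])) for k in range(1, n)] + [1]
    return [(bonds[k], in_factors[k], out_factors[k], bonds[k + 1]) for k in range(n)]


def split_parameters(shapes):
    """Return (central, auxiliary) parameter counts; the middle tensor is central."""
    counts = [prod(shape) for shape in shapes]
    central = counts[len(counts) // 2]
    return central, sum(counts) - central


# Hypothetical 64 x 64 weight matrix, with 64 = 2 * 16 * 2 on both sides.
shapes = mpo_shapes((2, 16, 2), (2, 16, 2))
central, auxiliary = split_parameters(shapes)
print("local tensor shapes:", shapes)
print("central parameters:", central)
print("auxiliary parameters:", auxiliary)
print("auxiliary share:", auxiliary / (central + auxiliary))
```

In this toy setting the two auxiliary tensors hold 32 of the 4128 parameters, which illustrates why updating only the auxiliary tensors keeps fine-tuning lightweight. The figures are specific to the hypothetical factorization and are not results from the paper.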

Paper Introduction (ACL Main Conference)

Paper Title: A Joint Model for Dropped Pronoun Recovery and Conversational Discourse Parsing in Chinese Conversational Speech

Authors: Jingxuan Yang, Kerui Xu, Jun Xu, Si Li, Sheng Gao, Jun Guo, Nianwen Xue, Jirong Wen

Corresponding author: Jun Xu

Paper Overview:

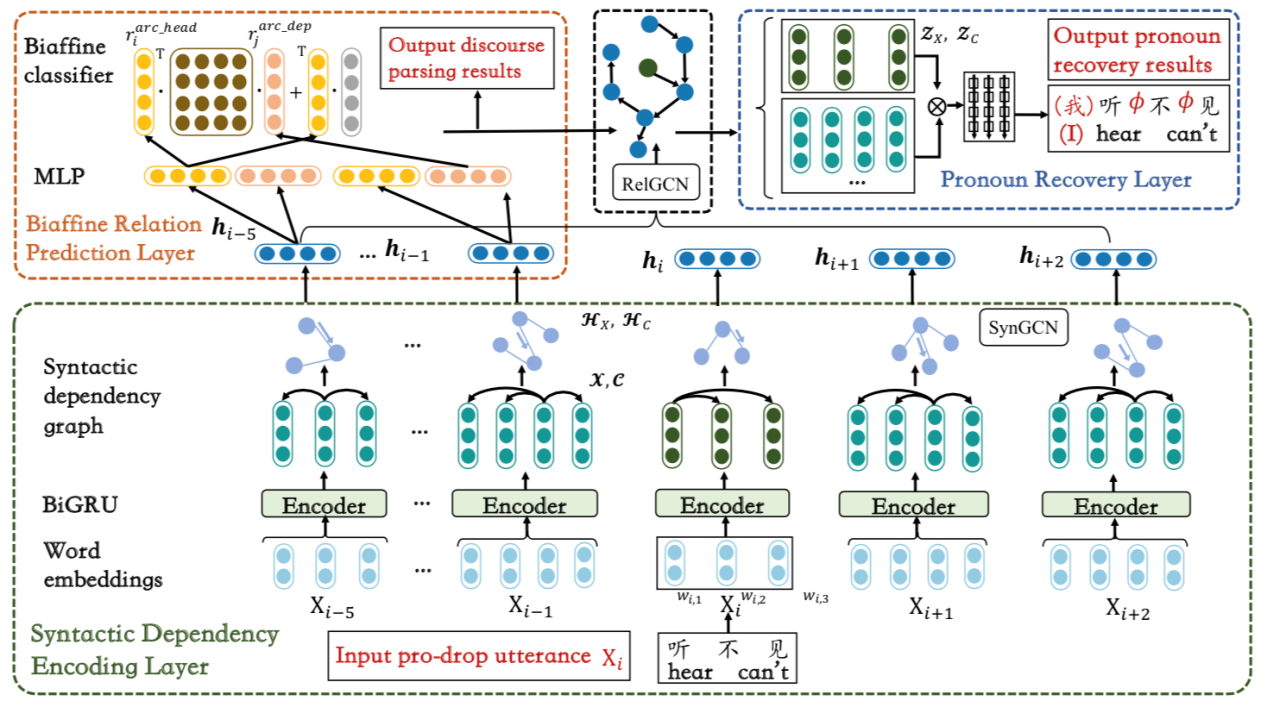

In this paper, we present a neural model for joint dropped pronoun recovery (DPR) and conversational discourse parsing (CDP) in Chinese conversational speech. We show that DPR and CDP are closely related, and that a joint model benefits both tasks. Our model, DiscProReco, first encodes the tokens in each utterance of a conversation with a directed Graph Convolutional Network (GCN). The token states of an utterance are then aggregated to produce a single state for that utterance. The utterance states are fed into a biaffine classifier to construct a conversational discourse graph. A second (multi-relational) GCN is then applied to the utterance states to produce discourse relation-augmented utterance representations, which are fused with the token states of each utterance as input to a dropped pronoun recovery layer. The joint model is trained and evaluated on a new Structure Parsing-enhanced Dropped Pronoun Recovery (SPDPR) dataset that we annotated with both types of information. Experimental results on the SPDPR dataset and other benchmarks show that DiscProReco significantly outperforms the state-of-the-art baselines on both tasks.

Paper Introduction (ACL Main Conference)

Paper Title: A Pre-training Strategy for Zero-Resource Response Selection in Knowledge-Grounded Conversations

Authors: Chongyang Tao, Changyu Chen, Jiazhan Feng, Jirong Wen, Rui Yan

Corresponding author: Rui Yan

Paper Overview: In recent years, much research on retrieval-based dialogue systems has focused on how to use background knowledge (such as documents) effectively when conversing with humans. However, it is difficult to collect large-scale dialogue datasets grounded in specific background documents, which hinders effective and adequate training of the system's knowledge selection and response selection modules. To overcome this challenge, we decompose the training of knowledge-grounded response selection into three tasks: 1) matching a query with a document; 2) matching a query with the dialogue history; 3) matching responses in multi-turn dialogue. All three tasks are unified in a pre-trained language model. The first two tasks help the model select and understand knowledge, while the last task enables the model to select an appropriate response given a query and background knowledge (the conversation history). In this way, the model can use ad-hoc retrieval data and a large amount of natural multi-turn dialogue data to learn how to select relevant knowledge and appropriate responses. We conducted experiments on two knowledge-grounded dialogue benchmarks. The results show that, compared with existing methods trained on crowdsourced data, the model achieves a considerable improvement.

Paper Introduction (ACL Findings)

Paper Title: Few-shot Knowledge Graph-to-Text Generation with Pretrained Language Models

Authors: Junyi Li, Tianyi Tang, Xin Zhao, Zhicheng Wei, Jing Yuan, Jirong Wen

Corresponding author: Xin Zhao

Paper Overview: This paper studies how to automatically generate natural language text describing the facts in a knowledge graph (KG). Drawing on the capabilities of pre-trained language models (PLMs) in language understanding and generation, we mainly consider few-shot settings. We make three main technical contributions: representation alignment, which bridges the semantic gap between the KG encoding and the PLM; a relation-biased KG linearization strategy, which produces better input representations; and multi-task learning, which learns the correspondence between KG and text. Extensive experiments on three benchmark datasets demonstrate the effectiveness of our model on the KG-to-text generation task. In particular, our model outperforms all comparison methods in both fully supervised and few-shot settings.

Paper Introduction (ACL Findings)

Paper Title: Leveraging Passage-Centric Similarity Relation for Improving Dense Passage Retrieval

Authors: Ruiyang Ren, Shangwen Lv, Yingqi Qu, Jing Liu, Xin Zhao, Qiaoqiao She, Hua Wu, Haifeng Wang, Jirong Wen

Corresponding authors: Jing Liu, Xin Zhao

Paper Overview: Recently, dense passage retrieval has become a mainstream approach to finding relevant information in various natural language processing tasks. A number of studies have been devoted to improving the widely adopted dual-encoder architecture. However, most of the previous studies only consider query-centric similarity relation when learning the dual-encoder retriever. In order to capture more comprehensive similarity relations, we propose a novel approach that leverages both query-centric and PAssage-centric sImilarity Relations (called PAIR) for dense passage retrieval. To implement our approach, we make three major technical contributions by introducing formal formulations of the two kinds of similarity relations, generating high-quality pseudo labeled data via knowledge distillation, and designing an effective two-stage training procedure that incorporates passage-centric similarity relation constraint. Extensive experiments show that our approach significantly outperforms previous state-of-the-art models on both MSMARCO and Natural Questions datasets.
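The two kinds of similarity relation can be illustrated with a small sketch. This is one plausible reading rather than the paper's formulation: the dot-product scoring, the pairwise softmax loss, and the weight `alpha` are all assumptions, and the vectors stand in for dual-encoder outputs. The query-centric term asks that the query score its positive passage above a negative passage; the passage-centric term asks that the positive passage be closer to the query than to the negative passage.

```python
import math


def dot(u, v):
    """Dot-product similarity between two embedding vectors."""
    return sum(a * b for a, b in zip(u, v))


def pairwise_nll(pos_score, neg_score):
    """Negative log softmax probability of the positive over {positive, negative}."""
    m = max(pos_score, neg_score)  # subtract the max for numerical stability
    log_z = m + math.log(math.exp(pos_score - m) + math.exp(neg_score - m))
    return log_z - pos_score


def combined_loss(q, p_pos, p_neg, alpha=0.5):
    """Weighted sum of a query-centric and a passage-centric loss (assumed form)."""
    # Query-centric: s(q, p+) should exceed s(q, p-).
    loss_q = pairwise_nll(dot(q, p_pos), dot(q, p_neg))
    # Passage-centric: s(p+, q) should exceed s(p+, p-).
    loss_p = pairwise_nll(dot(p_pos, q), dot(p_pos, p_neg))
    return (1 - alpha) * loss_q + alpha * loss_p


# Made-up embeddings: the query-centric relation holds (1 > 0),
# but the positive passage is closer to the negative passage (4) than to the query (1).
q, p_pos, p_neg = [1.0, 0.0], [1.0, 2.0], [0.0, 2.0]
print("query-centric only:", combined_loss(q, p_pos, p_neg, alpha=0.0))
print("passage-centric only:", combined_loss(q, p_pos, p_neg, alpha=1.0))
```

In this example the passage-centric term is much larger than the query-centric one, showing the kind of case that a query-centric objective alone would not penalize.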

Paper Introduction (ACL Findings)

Paper Title: Enhancing the Open-Domain Dialogue Evaluation in Latent Space

Authors: Zhangming Chan, Lemao Liu, Juntao Li, Haisong Zhang, Dongyan Zhao, Shuming Shi, Rui Yan

Corresponding author: Rui Yan

Paper Overview: The "one-to-many" nature of open-domain dialogue makes designing automatic evaluation methods a major challenge. Recent research attempts to solve this problem by directly measuring how well a generated reply matches the conversation context, and by using a discriminative model to learn from multiple positive samples. Despite the exciting progress made by such methods, they cannot be applied to training data that lacks multiple reasonable responses, which is the general case for real-world datasets. To this end, we propose EMS, a dialogue evaluation metric enhanced through latent space modeling. Specifically, we use self-supervised learning to obtain a smooth latent space that not only captures the contextual information of the dialogue but also models the possible reasonable responses to the context. We then use the information captured in the latent space to enhance the dialogue evaluation process. Experimental results on two real-world dialogue datasets demonstrate the superiority of our method: its Pearson and Spearman correlations with human judgment exceed those of all baseline models.
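For readers unfamiliar with how an automatic metric is compared against human judgment, the sketch below computes Pearson and Spearman correlations in plain Python. It is a generic illustration, not the paper's evaluation code, and the scores are made up for the example. Spearman correlation is computed here as the Pearson correlation of average ranks.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    std_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    std_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (std_x * std_y)


def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared
        start = end + 1
    return ranks


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Made-up scores for four responses: an automatic metric vs. human ratings.
metric_scores = [0.2, 0.4, 0.5, 0.9]
human_scores = [1, 2, 4, 5]
print("Pearson:", pearson(metric_scores, human_scores))
print("Spearman:", spearman(metric_scores, human_scores))
```

Because the metric orders the four responses exactly as the human ratings do, the Spearman correlation is 1 here even though the relationship is not perfectly linear.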